MEDIA PROVENANCE

DECaDE researchers study the decentralised economy through the lens of the creative industries – among the earliest sectors to transition to a decentralised model of content creation and consumption.

Mainstream social media platforms such as Facebook, X, and Instagram are each controlled by, and rely on, a single service provider. Users of these ‘centralised’ platforms must therefore place significant trust in the provider to control their data. In this creative supply chain, questions of media provenance are paramount.

Given the rapid advances in generative AI, it is vital that people can make informed decisions about the authenticity of content. DECaDE has contributed tools to trace provenance facts, such as who made an image and how, to help users make those trust decisions.

“DECaDE’s research collaboration with Adobe and the Content Authenticity Initiative has helped accelerate the pace of innovation in media provenance technology. We’re excited to continue to work together on authenticity and value creation as we bring the creative economy of the future to life.”

Andy Parsons, Sr. Dir., Content Authenticity Initiative, Adobe

Research Highlights

Helping to fight fake news

- Contributed to the Coalition for Content Provenance and Authenticity (C2PA), a cross-industry standards group for media provenance. The team have combined AI and Distributed Ledger Technology to address the key weakness of metadata: it is easily stripped from its assets, including by all social media platforms.

- Developed fingerprinting and invisible watermarking algorithms, in collaboration with the Adobe-led Content Authenticity Initiative, to help match content stripped of its metadata.

- Co-developed TrustMark watermarking technology with Adobe, which has been open-sourced under the MIT Licence, enabling commercial use, to help drive adoption. The same technology can also be used to trace what data our AI models are trained on, so that we can ensure it is safe.
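
To illustrate how fingerprint matching can recover provenance once metadata has been stripped, the sketch below uses a simple average hash. This is a generic perceptual hashing technique standing in for DECaDE's own algorithms, which are not described here. The function names, the `registry` dictionary, and the distance threshold are assumptions made for illustration only.

```python
from typing import Dict, List, Optional

Image = List[List[int]]  # grayscale pixels (0-255), row-major


def average_hash(pixels: Image, size: int = 8) -> int:
    """Return a size*size-bit fingerprint using the generic average-hash method."""
    height, width = len(pixels), len(pixels[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")

    # Downsample to a size x size grid by averaging each block of pixels.
    cells = []
    for gy in range(size):
        y0, y1 = gy * height // size, (gy + 1) * height // size
        for gx in range(size):
            x0, x1 = gx * width // size, (gx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))

    # Each bit records whether a cell is brighter than the overall mean.
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def find_match(
    query_hash: int, registry: Dict[str, int], max_distance: int = 6
) -> Optional[str]:
    """Return the closest registered asset id within max_distance, else None.

    `registry` is a hypothetical in-memory store of asset id -> fingerprint.
    """
    best_id, best_distance = None, max_distance + 1
    for asset_id, stored_hash in registry.items():
        distance = hamming_distance(query_hash, stored_hash)
        if distance < best_distance:
            best_id, best_distance = asset_id, distance
    return best_id
```

Because the fingerprint is computed from pixel content rather than from attached metadata, a copy that has been re-saved or slightly brightened can still be matched to its registered original, whose provenance record can then be looked up.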

DECaDE explores establishing provenance for generative content.

The requirement for artists to be recognised and rewarded for the reuse of their intellectual property is fundamental to the creative economy. This is especially prominent with the emergence of Generative AI.

Our researchers have developed the Ekila system, which helps trace the training images most responsible for a Generative AI image. It then uses Non-Fungible Tokens (NFTs) to trace the owners of those images. Ekila extends NFTs to help creatives assert usage rights over their images and to act as a mechanism for issuing micropayments for different uses, including GenAI training. We refer to this extension as ORA (Ownership-Rights-Attribution).

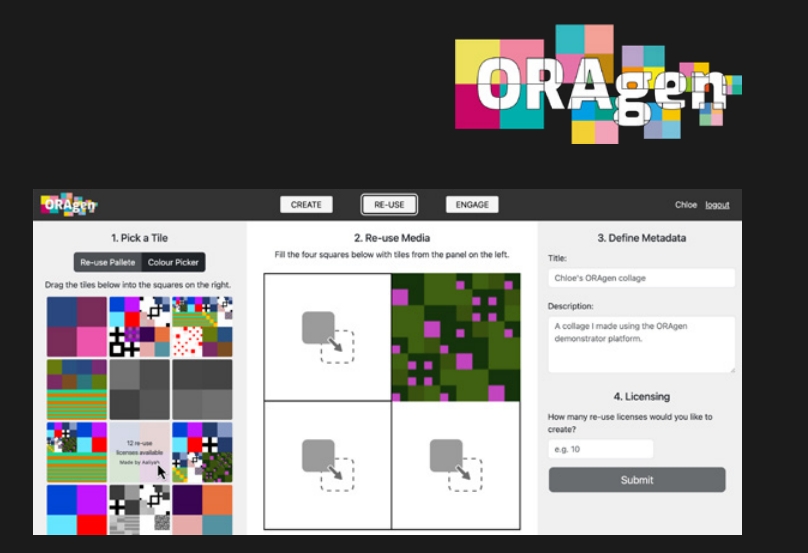
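
The attribution-then-payment flow described above can be sketched as a proportional split. This is a minimal illustration under stated assumptions, not Ekila's actual mechanism: the integer attribution weights, the `owners` mapping (standing in for an NFT ownership lookup), and the function name `split_micropayment` are all hypothetical.

```python
from typing import Dict


def split_micropayment(
    total_units: int,
    attribution: Dict[str, int],
    owners: Dict[str, str],
) -> Dict[str, int]:
    """Split a payment between image owners in proportion to attribution weight.

    total_units: payment in the smallest currency unit (hypothetical).
    attribution: image id -> non-negative integer weight (hypothetical scores).
    owners: image id -> owner id, standing in for an NFT ownership lookup.
    """
    total_weight = sum(attribution.values())
    if total_weight <= 0:
        raise ValueError("attribution weights must sum to a positive value")

    payouts: Dict[str, int] = {}
    for image_id, weight in attribution.items():
        share = total_units * weight // total_weight  # rounds down
        owner = owners[image_id]
        payouts[owner] = payouts.get(owner, 0) + share

    # Rounding down can leave a few units unallocated; assign them to the owner
    # of the most heavily attributed image so payouts sum to the total.
    top_image = max(attribution, key=lambda image_id: attribution[image_id])
    payouts[owners[top_image]] += total_units - sum(payouts.values())
    return payouts
```

Integer weights and integer currency units keep the arithmetic exact, so the payouts always sum to the amount being distributed.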

ORA-Gen – a new attribution and rights management tool

ORA-Gen enables creatives to:

- independently prove ownership of their digital assets and associated licences

- embed metadata, including provenance data, in a way that cannot be easily stripped

- design and issue specific, bespoke licences for their digital creations.